An Araucaria tree asset pack for the SpeedTree library. The models needed versions suited to both VFX applications, with a high level of detail, and games, with constraints on polygon count and texture usage.

As the 3D modeler, I created the tree models from scratch, from scanning real trees for meshes and materials to setting up a showcase scene in Unreal Engine 4.

Araucaria angustifolia (Pinheiro do Paraná) is a species of pine that has lived on Earth for 200 million years. Each individual can live up to 450 years and reach up to 45 meters in height. It can be found in South America, mainly in the southern region of Brazil, and is currently at risk of extinction. Image rendered with Unreal Engine 4.

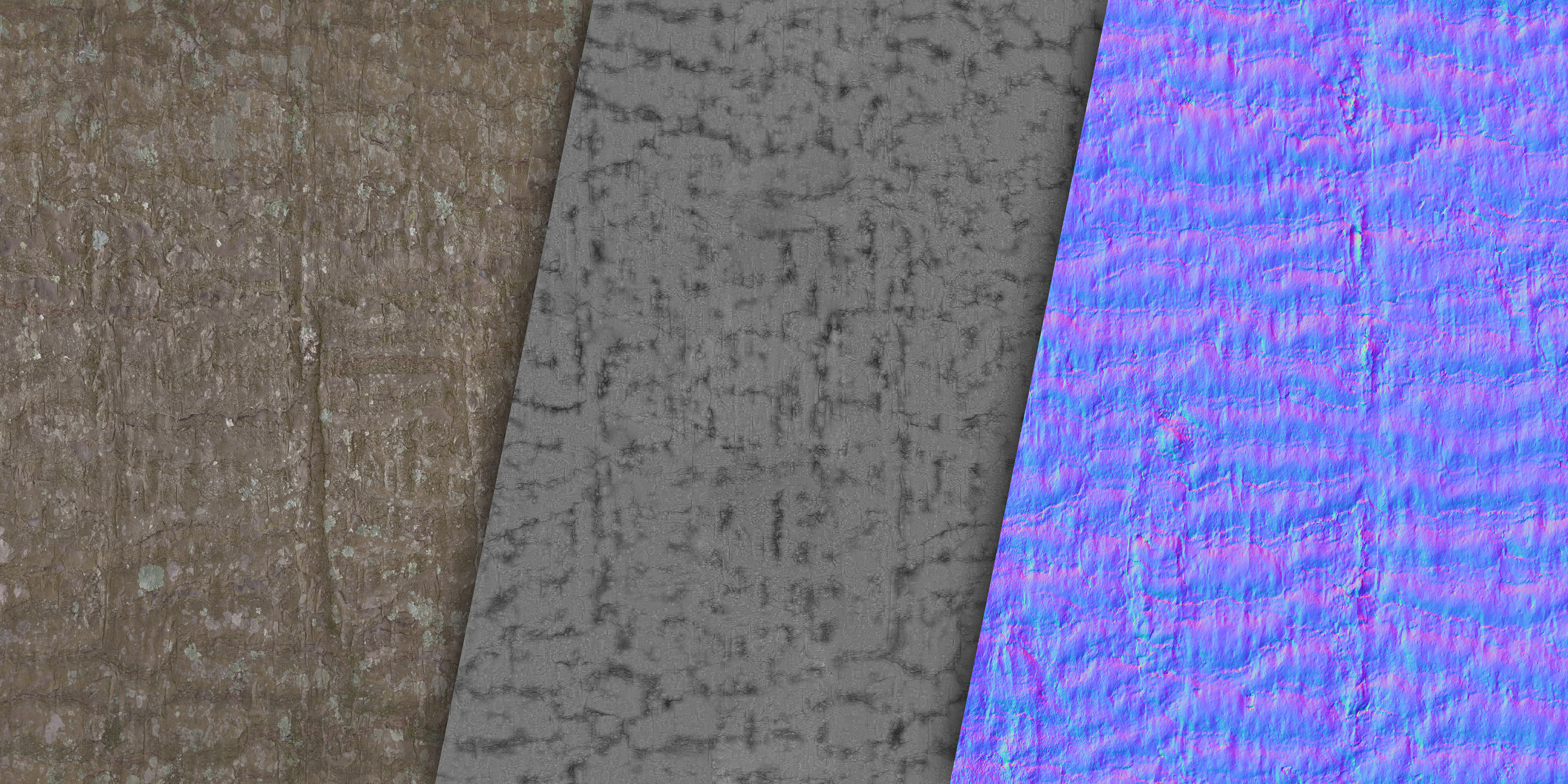

I created the bark materials from photogrammetry scans of a real tree using RealityCapture.

The high-poly model was then cleaned and prepared in 3ds Max for baking into PBR textures using xNormal.

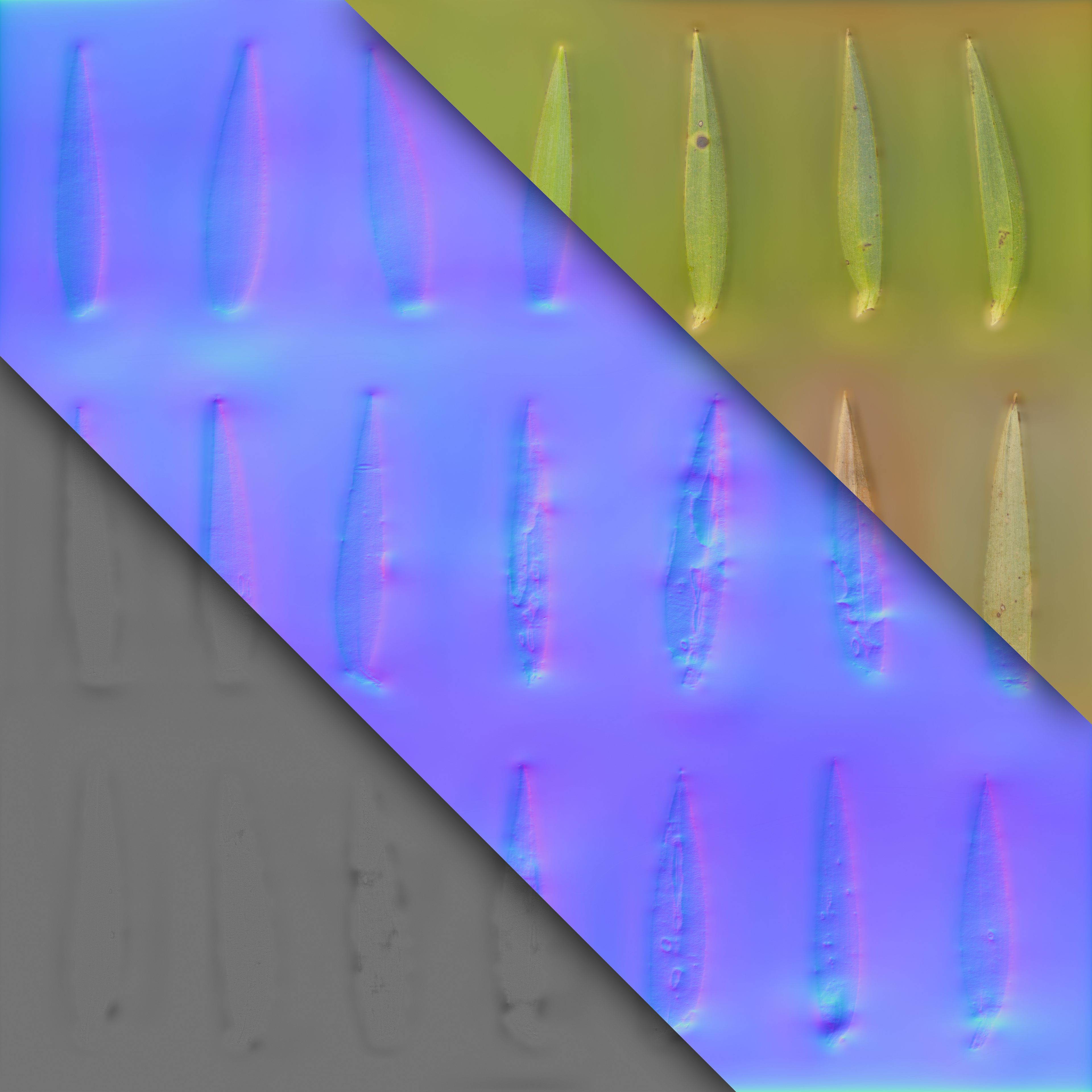

The leaves were sourced from a single photograph of samples on a black cardboard background.

I processed that photograph in Photoshop and then used it to generate PBR textures with Substance Bitmap2Material.

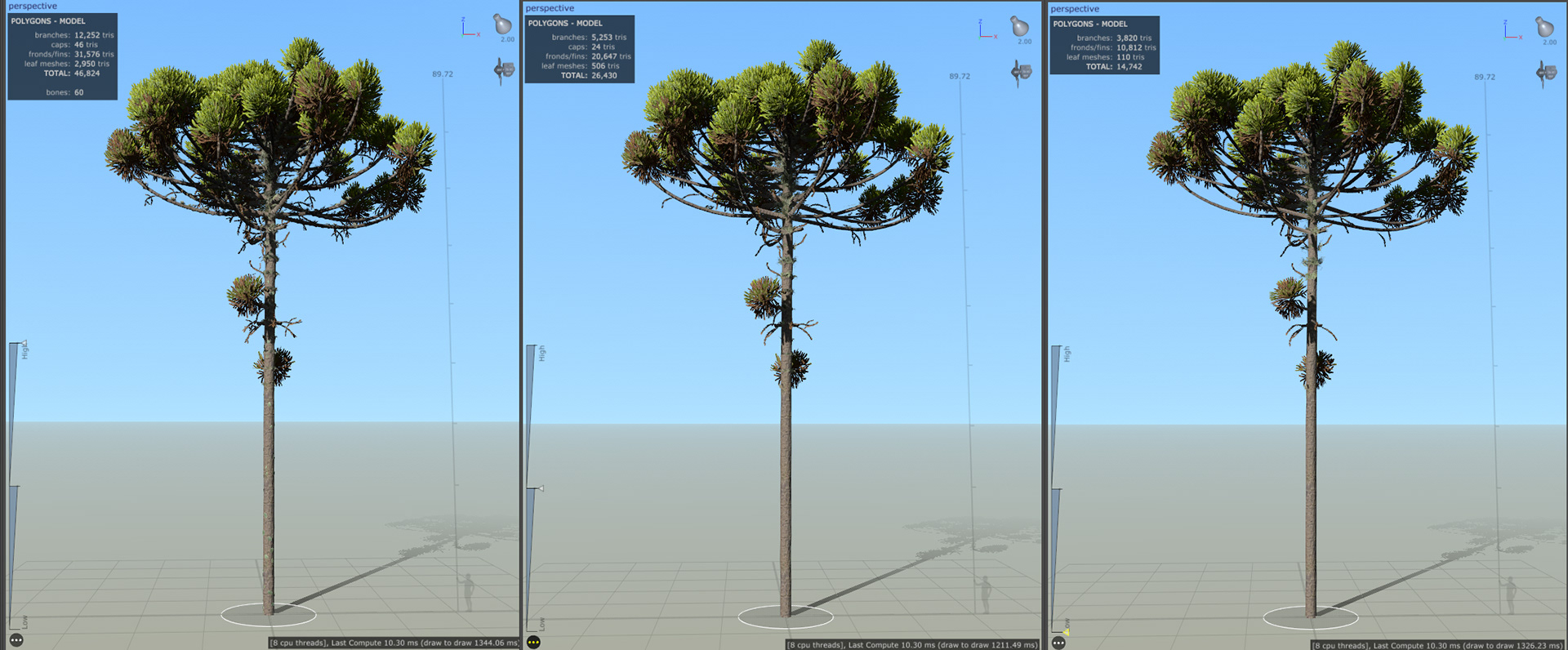

Procedural graph and meshes of the model: the Game version in the first two images, the VFX version in the third.

The VFX version was modeled first. It used high-poly meshes shaped by tiled displacement textures generated from the photogrammetry scans. This approach ensured accurate replication of the species' characteristic lumpy trunks. The VFX model, at around 1.2 million triangles, was then optimized to a 50k-triangle budget for the Game version.
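As a quick check on the numbers above, this short Python sketch works out how aggressive that optimization is. The function name `reduction` is only illustrative, and the triangle counts are the approximate figures quoted in the text.

```python
# Approximate triangle counts quoted in the text above.
VFX_TRIANGLES = 1_200_000
GAME_BUDGET = 50_000


def reduction(source, target):
    """Return (reduction ratio, percentage of triangles kept)."""
    return source / target, 100.0 * target / source


ratio, kept = reduction(VFX_TRIANGLES, GAME_BUDGET)
print(f"{ratio:.0f}x fewer triangles, {kept:.1f}% kept")
# prints "24x fewer triangles, 4.2% kept"
```

In other words, the Game version keeps roughly one triangle in every 24 from the VFX model.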

Albedo - Opacity - Normal Map - Ambient Occlusion

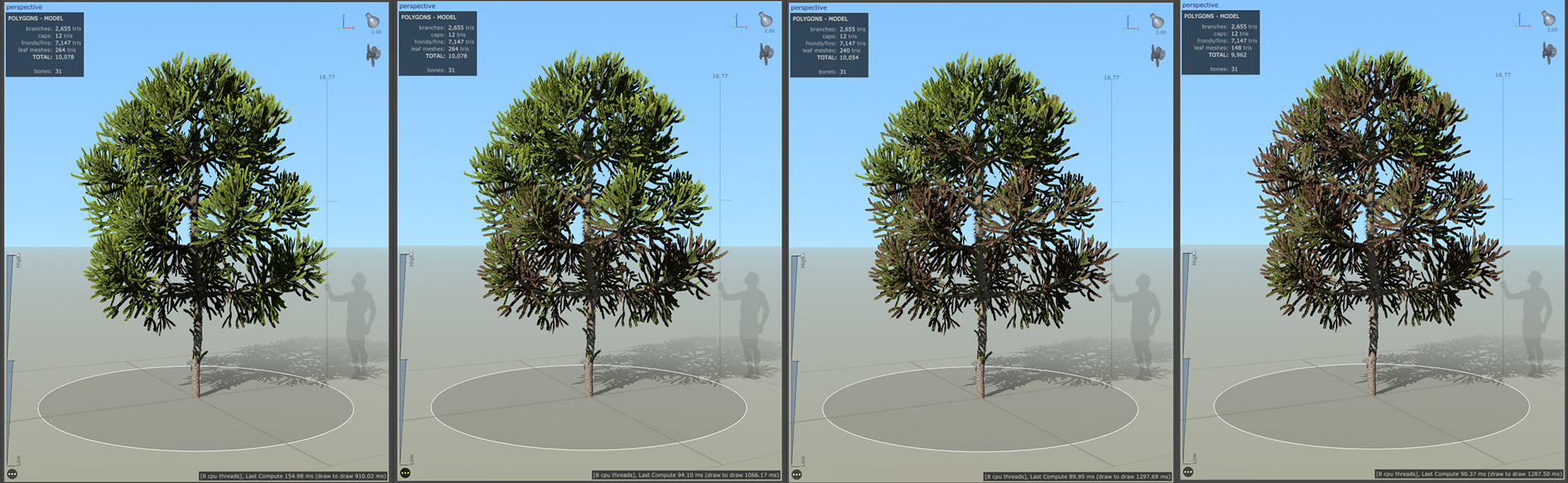

While the high-poly model could afford individually modeled clusters of leaflets, the Game version required a group of planes with baked atlases to reproduce branches with only a few triangles.

That approach also requires bent vertex normals on the cluster meshes, so that from a certain distance they react to light as a single rounded group of leaflets. This helps hide the low-poly nature of the model.
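One common way to bend normals is to point each vertex normal away from a chosen center, so that flat cards shade like parts of a sphere. The sketch below illustrates that idea in plain Python. It is an assumption about the general technique, not the exact method used for these models, and the names `bent_normals` and `normalize` are hypothetical.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(c / length for c in v)


def bent_normals(vertices, center=None):
    """Point every vertex normal away from the cluster center.

    If no center is given, the centroid of the vertices is used.
    """
    if center is None:
        count = len(vertices)
        center = tuple(sum(v[i] for v in vertices) / count for i in range(3))
    return [
        normalize(tuple(v[i] - center[i] for i in range(3)))
        for v in vertices
    ]


# A flat quad standing in for one leaf card. Its true face normal is (0, 1, 0).
quad = [(-1.0, 0.0, -1.0), (1.0, 0.0, -1.0), (1.0, 0.0, 1.0), (-1.0, 0.0, 1.0)]

# Assumed center placed one unit behind the card, as if inside the cluster.
normals = bent_normals(quad, center=(0.0, -1.0, 0.0))
for vertex, normal in zip(quad, normals):
    print(vertex, "->", tuple(round(c, 3) for c in normal))
```

The four normals fan outward instead of all pointing along the card's face normal, which is what makes the flat card shade like a rounded volume.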

The downside of this technique is that it requires the material shader in the game engine to skip any procedure that flips normals on back faces, and it breaks PBR precision, especially for subsurface effects.
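To see why the back-face flip has to be skipped, here is a minimal Python sketch using simple Lambert diffuse shading. It is a simplified stand-in for a real engine shader: the function names and the `flip_backfaces` flag are hypothetical, and subsurface effects are not modeled.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lambert(normal, light_dir):
    """Diffuse term for unit vectors; light_dir points toward the light."""
    return max(0.0, dot(normal, light_dir))


def shade(bent_normal, face_normal, view_dir, light_dir, flip_backfaces):
    """Shade one point on a two-sided card.

    view_dir points from the surface toward the viewer. When flip_backfaces
    is True, the shading normal is negated whenever the card is seen from
    behind, as many two-sided materials do.
    """
    normal = bent_normal
    if flip_backfaces and dot(face_normal, view_dir) < 0.0:
        normal = tuple(-c for c in normal)
    return lambert(normal, light_dir)


face_normal = (0.0, 0.0, 1.0)   # the card geometrically faces +Z
bent_normal = (0.0, 1.0, 0.0)   # bent to point up, out of the cluster
light_dir = (0.0, 1.0, 0.0)     # light coming from straight above
behind = (0.0, 0.0, -1.0)       # viewer looking at the back of the card

print(shade(bent_normal, face_normal, behind, light_dir, flip_backfaces=False))
# prints 1.0
print(shade(bent_normal, face_normal, behind, light_dir, flip_backfaces=True))
# prints 0.0
```

With the flip enabled, the same card goes from fully lit to black as soon as it is seen from behind, even though the light has not moved. Skipping the flip keeps the bent normal meaningful from both sides.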

Game Model: Hero (47k) - Desktop (26k) - Mobile (15k)

Game Model: Different season variations

Photos of real Araucárias used as reference

The showcase scene featured in the images below was crafted with Unreal Engine 4 and included additional foliage assets that were also created using a similar workflow with the SpeedTree Modeler. All the models are in their Game versions.

The lighting setup is fully dynamic. It is based on a dynamic Directional Light, a Sky Light and a Sky Atmosphere actor. There is no Global Illumination, only Distance Field Ambient Occlusion.

The background elements are built from low-poly scanned building models and satellite imagery from several sources. There was no need for high-fidelity assets there, since that region is always out of focus.

Credits:

Guilherme Rabello (rabellogp.com)