This project required building a collection of characters from the Cornerstone metaverse in UE5 and rendering 5,000 variations as high-quality images for an NFT release.

As Senior Tech Lead of the Cornerstone team, I created tools that empowered the art team to create Companions with a wide array of traits, examine them in different settings, maintain visual quality standards, synchronize data with an external database, and automate the rendering process of a large batch of assets.

Overview of the Companions NFT collection on the OpenSea platform

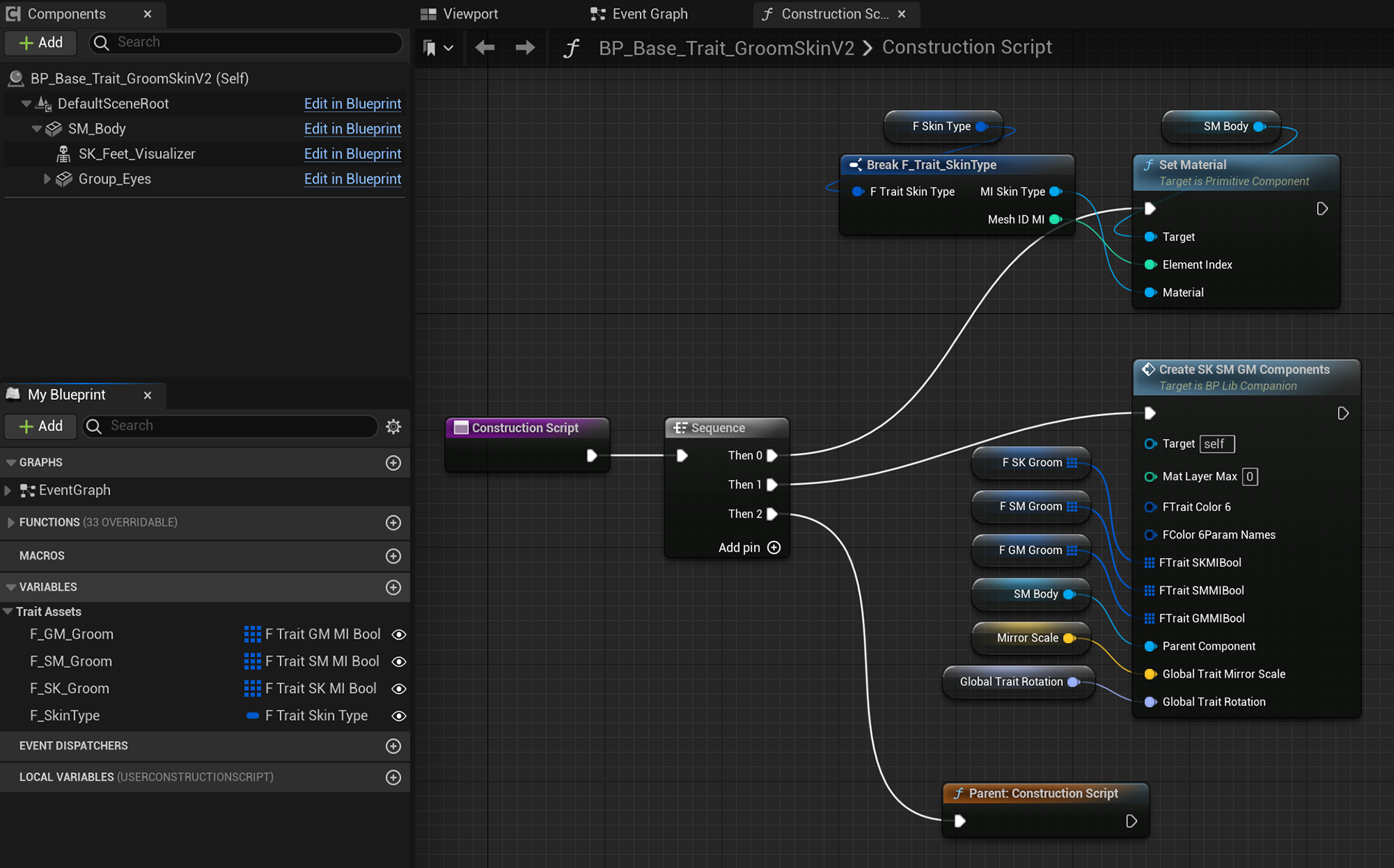

Companion Generator Blueprint

The Companions are constructed from a shared set of body and eye meshes, along with several variable components that are also reflected in the final NFT traits: Color, Creature Feature, Creature Type, Expression, Eye, Noza Touch, Skin, and Tail. From an engine perspective, these components can be composed of one or more assets of various types. For instance, the Skin component might consist of a Material Instance applied to the body, along with several Groom and/or Mesh assets layered on top of it.

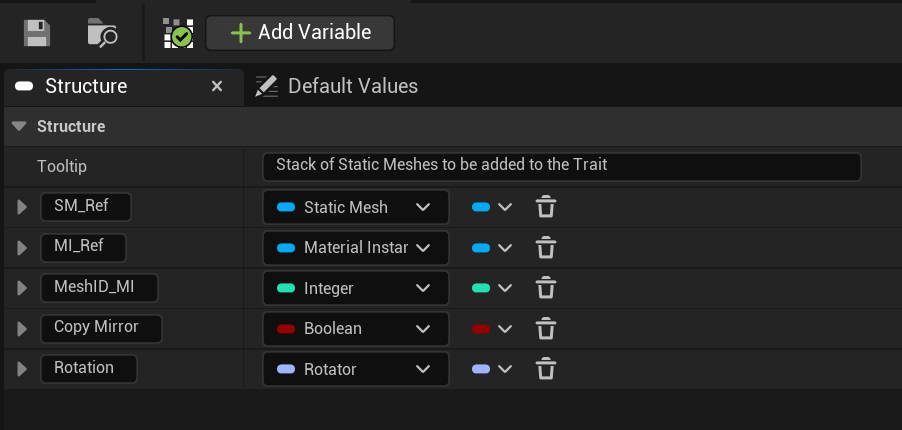

To accommodate this structure, I designed a Base Trait Blueprint featuring body and eye components solely for displaying features in asset thumbnails. Subsequently, I established child Base Blueprints for each type of Trait. Each of these child Blueprints included variable arrays of Structures comprising Static Meshes, Groom, Skeletal Meshes, and any other asset types compatible with that particular trait.

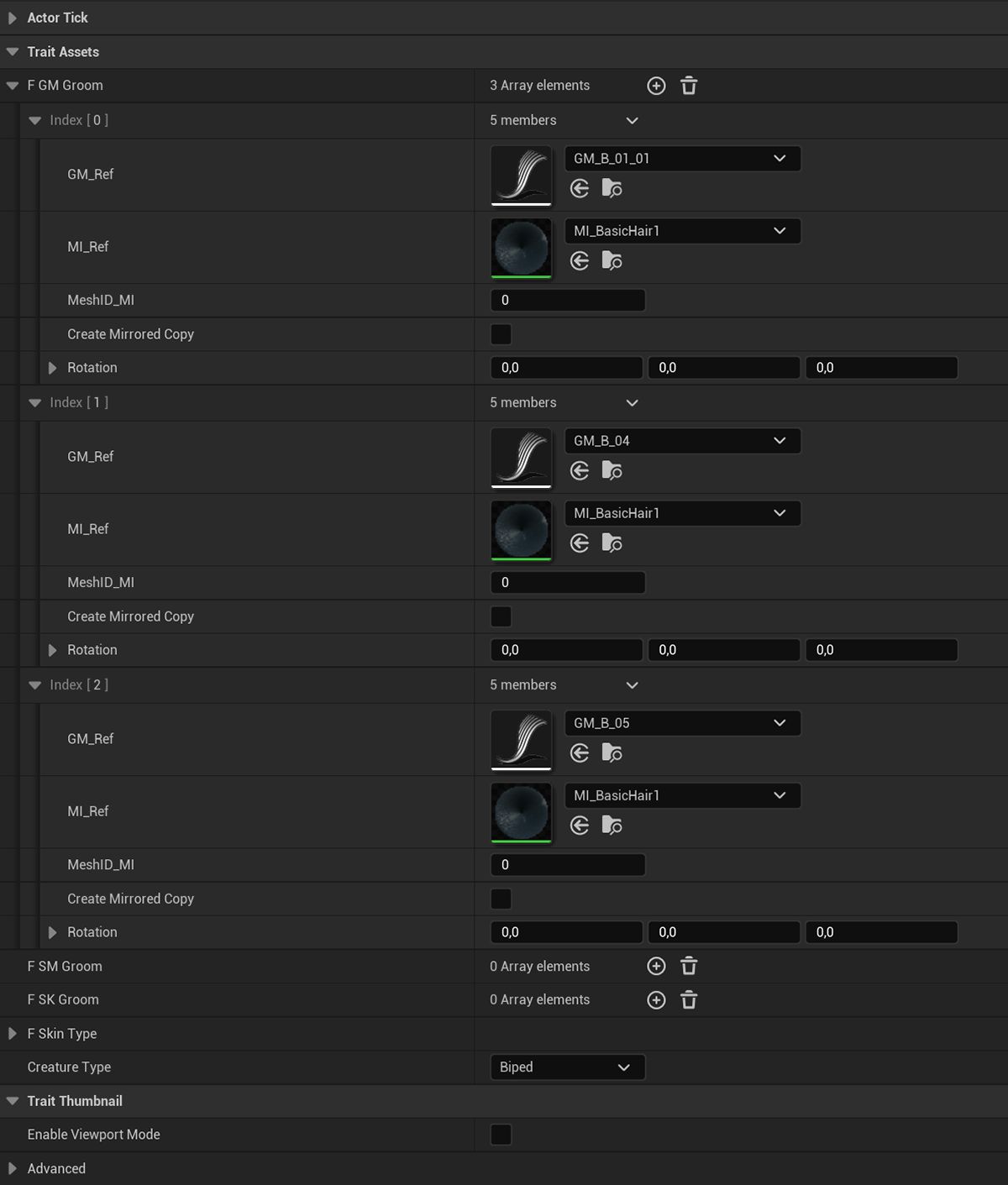

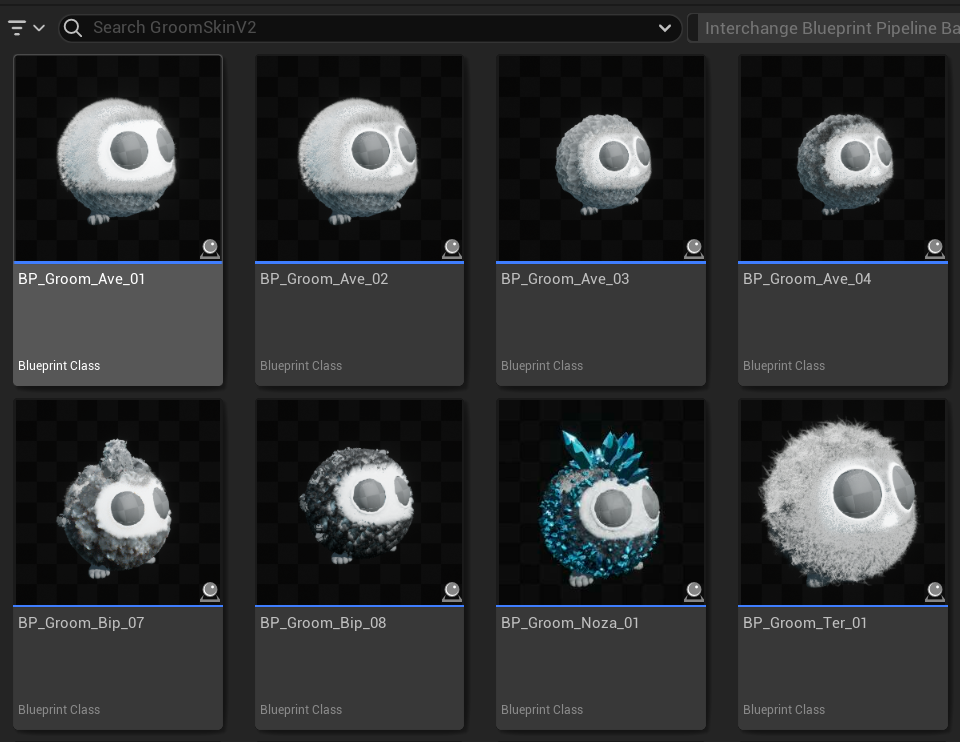

As an example, to generate new Skin Traits, artists would create a child of the Base Skin Trait Blueprint and open it. The "data only" view of the blueprint already allowed them to include the compatible types of structures and reference the desired assets. This new Skin Trait would then become visible in the content browser, complete with appropriate thumbnails for rapid visual identification.

Overview of the Base Skin Trait Blueprint with compatible variables and functions to assemble the thumbnail preview.

Example of Static Mesh structure with all the related parameters the artists could also customize.

Example of a child Skin Trait Blueprint configured with references to assets.

Content browser view of Skin Traits created with the blueprint.

Example of a Companion generated by a combination of Traits.

Subsequently, a Companion Generator Blueprint was crafted, following the same model as the Trait Blueprints. However, instead of featuring variables of specific structure types, it contains variables representing all the Trait Blueprint types that constitute a Companion. In the construction script, it processes these Trait variables and assembles a complete Companion, ensuring all parameters and interrelated Traits are appropriately incorporated. For instance, Color may influence Skin and Tail, and this relationship is managed during the assembly process.
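The assembly step described above can be sketched outside the engine in plain Python. This is only an illustration of the idea, not the actual construction script: the `Trait` class, the `tint` field, and the `COLOR_AFFECTS` map are hypothetical names, and the asset strings stand in for the mesh, groom, and material references a real Trait Blueprint would hold.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Trait:
    """One trait option; `assets` stands in for engine asset references."""
    trait_type: str
    name: str
    assets: list = field(default_factory=list)
    tint: Optional[str] = None  # only meaningful for Color traits in this sketch


# Assumption for illustration: the trait types a Color trait influences.
COLOR_AFFECTS = ("Skin", "Tail")


def assemble_companion(traits):
    """Merge traits into one description, then apply cross-trait rules."""
    by_type = {t.trait_type: t for t in traits}
    companion = {
        t.trait_type: {"name": t.name, "assets": list(t.assets), "tint": None}
        for t in traits
    }
    # Resolve the Color dependency after every trait has been registered,
    # so the result does not depend on the order the traits were listed in.
    color = by_type.get("Color")
    if color is not None:
        for dependent in COLOR_AFFECTS:
            if dependent in companion:
                companion[dependent]["tint"] = color.tint
    return companion
```

Resolving interrelated Traits in a second pass keeps each Trait definition independent: a Skin does not need to know which Color it will eventually be paired with.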

By placing an instance of this blueprint in the level, artists could effortlessly reference the Traits they desired and immediately visualize the Companion in the viewport. Traits were easily located in the content browser using the search icon. Furthermore, by generating a child blueprint from that instance, they could save a variation of the Companion for future use.

The blueprint also included various parameters for fine-tuning the overall appearance of the Companion, including "look-at" functions for the eyes, individual eye and eyelid controls, and more, to facilitate adjustments for screenshot purposes.

Manual creation of thousands of Companions was clearly not a feasible option. The goal was to establish a synchronization mechanism between the editor and an external database. This would enable the generation of Trait combinations by a separate team working on the NFT project, and then the data could be imported into the engine to automatically assemble Companions based on unique IDs.

To achieve this, I established a workflow centered around Data Table (DT) assets. A separate Data Table was created for each Trait type and filled with the options selected by the artists. These tables could be exported as CSV or JSON files and processed by the NFT team to generate the appropriate Trait combinations that form Companions. This data was then organized into a Companion spreadsheet, where each row represented a Companion ID and included references to each Trait that composed it.

Subsequently, this spreadsheet was imported into Unreal Engine as a Companion Data Table. With this setup, the Companion Generator Blueprint was fully equipped to read the data and locate any row based on the Companion ID, thus enabling the automatic assembly of Companions.

Example of Companion Data Table with ID and references to Trait Blueprints.
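As a rough illustration of the lookup, the following Python sketch loads a Companion table from CSV text and retrieves the Trait references for one ID. The column names and the `BP_...` values are invented for the example; the real table layout is not documented here, and in the engine the lookup was done by the Companion Generator Blueprint rather than by a script like this.

```python
import csv
import io

# Hypothetical export: column names and Blueprint names are assumptions.
COMPANION_CSV = """CompanionID,Type,Color,Skin,Tail
1,Aquatic,BP_Color_Teal,BP_Skin_Scales,BP_Tail_Fin
2,Biped,BP_Color_Amber,BP_Skin_Fur,BP_Tail_Short
"""


def load_companion_table(csv_text):
    """Index the rows by Companion ID and reject duplicate IDs."""
    table = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        companion_id = int(row.pop("CompanionID"))
        if companion_id in table:
            raise ValueError(f"duplicate Companion ID {companion_id}")
        table[companion_id] = row
    return table


def traits_for(table, companion_id):
    """Return the Trait references that make up one Companion."""
    return table[companion_id]
```

Rejecting duplicate IDs at import time is a cheap safeguard when the spreadsheet is produced by another team, since two rows with the same ID would otherwise silently overwrite each other.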

Automatic Render Script

Each type of Companion needed to be rendered in a different environment: Aquatic, Ave, Biped and Terrestrial. To automate the render process of the 5,000 Companions, I created a Master Level with those four environments as sublevels. Each sublevel had its own Companion Blueprint, camera and lighting setup.

In the Master Level blueprint, I established the logic to load one of the sublevels, search the Companion Data Table for the first Companion that matched the correct type for that environment, load the associated Traits, and initiate the rendering process using the Movie Pipeline Runtime Subsystem. Artists had the flexibility to configure Movie Pipeline Primary Config and Sequence files specific to each sublevel for rendering.
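The row search in that logic can be sketched in Python as follows. This is a simplified stand-in for the Blueprint logic, assuming each table row exposes the Companion type under a `Type` key (a hypothetical column name); loading sublevels and starting the render are engine-side steps and are not modeled here.

```python
def first_matching_row(rows, companion_type, start=0):
    """Return the index of the first row at or after `start` whose type
    matches the environment being rendered, or None if there is none."""
    for index in range(start, len(rows)):
        if rows[index]["Type"] == companion_type:
            return index
    return None


def rows_for_environment(rows, companion_type):
    """Collect every matching row index by repeating the search, resuming
    just past the previous hit each time."""
    matches = []
    index = first_matching_row(rows, companion_type)
    while index is not None:
        matches.append(index)
        index = first_matching_row(rows, companion_type, start=index + 1)
    return matches
```

Resuming the search from the previous match, rather than from the top of the table, is what lets a single loaded sublevel work through all Companions of its type before the next environment is loaded.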

Furthermore, I incorporated support for initialization arguments, including "start row," "end row," "companion type," and "output folder" values. This feature allowed the runtime master level to be launched from the command line, permitting the specification of intervals and types of companions to be rendered. Consequently, the rendering process could be distributed across multiple computers in the network, and specific renders could be updated later without the necessity of re-rendering the entire batch.
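To make the distribution idea concrete, here is a minimal Python sketch of parsing such arguments and splitting a table into per-machine intervals. The flag spellings (`--start-row` and so on), the default output folder, and the helper names are assumptions for illustration; the actual project passed these values to the Unreal runtime, and its exact command-line syntax is not shown in this article.

```python
import argparse

COMPANION_TYPES = ["Aquatic", "Ave", "Biped", "Terrestrial"]


def parse_render_args(argv):
    """Parse the four initialization values described above."""
    parser = argparse.ArgumentParser(description="Render a slice of the Companion table.")
    parser.add_argument("--start-row", type=int, default=0)
    parser.add_argument("--end-row", type=int, required=True)
    parser.add_argument("--companion-type", choices=COMPANION_TYPES)
    parser.add_argument("--output-folder", default="renders")
    args = parser.parse_args(argv)
    if args.end_row < args.start_row:
        parser.error("--end-row must not be smaller than --start-row")
    return args


def split_rows(total_rows, machines):
    """Return inclusive (start, end) row intervals, one per machine,
    spreading any remainder over the first machines."""
    base, extra = divmod(total_rows, machines)
    intervals = []
    start = 0
    for i in range(machines):
        size = base + (1 if i < extra else 0)
        if size == 0:
            continue  # more machines than rows: leave the rest idle
        intervals.append((start, start + size - 1))
        start += size
    return intervals
```

For example, `split_rows(5000, 4)` yields four intervals of 1,250 rows each, and re-rendering a handful of Companions later only requires launching one process with a narrow start and end row.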

Credits:

Cornerstone Team from Zoan Group (cornerstone.land | zoan.com)